Ever Wonder How Facial Recognition Works?

We do too. By now we’ve posted a few articles on Eye Tracking Update that mention face detection technologies being employed for security measures. It’s something we read about more often these days in news stories on counterterrorism and homeland security. But how does face detection actually work? A recent post that, oddly enough, covered makeup patterns meant to hide the wearer from face detection pointed us to an interesting article that first appeared in SERVO Magazine back in February of 2007.

When you use a facial detection application, chances are you’re actually using an algorithm from 10 years ago. The algorithm, developed in 2001 by Paul Viola and Michael Jones and typically known as the Viola-Jones method (or even just Viola-Jones), is centered around the combination of a few key concepts. One of these concepts is called a Haar feature.

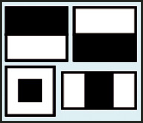

These simple rectangular features are based on Haar wavelets, which are single-wavelength square waves with one high interval and one low interval. In two dimensions, the square wave looks like a pair of adjacent rectangles, one light and one dark. The actual rectangle combinations used for visual object detection are not true Haar wavelets but variations better suited to visual recognition tasks. That’s why they’re known as Haar “features” (or Haar-like features) as opposed to true wavelets.

Now think of a face. There are various zones, features, protrusions, shadows, highlights, etc. Each of these can be approximated by a pattern of light and dark rectangles, sort of a pixelation of the features located on the human face. These patterns of contrasting pixels are what the detector looks for in an image.

The presence of a Haar feature is determined by subtracting the average dark-region pixel value from the average light-region pixel value. If the result is above a certain threshold (which varies depending on what stage of learning the algorithm is set to; read on), that feature is determined to be present.
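To make the subtraction concrete, here is a minimal sketch in plain Python. The function names, the tiny 4x4 image, and the threshold of 100 are our own illustrative assumptions, not values from the Viola-Jones work.

```python
def region_mean(image, top, left, height, width):
    """Average pixel value inside a rectangle of a 2-D list of grayscale values."""
    total = 0
    for row in image[top:top + height]:
        total += sum(row[left:left + width])
    return total / (height * width)


def haar_feature_present(image, light, dark, threshold):
    """Return (value, present) for a two-rectangle feature.

    `light` and `dark` are (top, left, height, width) tuples. The names and
    the threshold are hypothetical, chosen only for this illustration.
    """
    value = region_mean(image, *light) - region_mean(image, *dark)
    return value, value > threshold


# A made-up 4x4 "image": bright on top, dark on the bottom.
image = [
    [200, 210, 190, 200],
    [205, 195, 200, 210],
    [40, 50, 60, 50],
    [45, 55, 50, 40],
]

# Light rectangle = top two rows, dark rectangle = bottom two rows.
value, present = haar_feature_present(
    image, light=(0, 0, 2, 4), dark=(2, 0, 2, 4), threshold=100
)
```

In this toy image the light region is much brighter than the dark one, so the difference clears the threshold and the feature counts as present.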

Viola and Jones were able to determine the presence or absence of hundreds of Haar features efficiently using a technique called the integral image. Basically, small units are added together to create a sum total, or integral value. For face detection, the integral value at each pixel is the sum of all the pixels above it and to its left, the pixel itself included. Starting at the top left and traversing right and down, the entire image can be integrated in a single pass. After that, the pixel sum of any rectangle can be computed from just four integral values, one for each corner, which is what makes checking so many Haar features practical.
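As a rough sketch of the integral image idea (our own plain-Python illustration, with hypothetical function names and a made-up 3x3 image), the table is built in one pass and any rectangle sum then takes four lookups:

```python
def integral_image(image):
    """Build a summed-area table with an extra zero row and column.

    ii[r + 1][c + 1] holds the sum of all pixels at or above row r and
    at or left of column c.
    """
    rows, cols = len(image), len(image[0])
    ii = [[0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        row_sum = 0  # running sum of the current row
        for c in range(cols):
            row_sum += image[r][c]
            ii[r + 1][c + 1] = ii[r][c + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


# A made-up 3x3 image for illustration.
pixels = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
table = integral_image(pixels)
lower_right = rect_sum(table, top=1, left=1, height=2, width=2)  # 5 + 6 + 8 + 9
```

The padding row and column of zeros are a convenience so rectangles touching the top or left edge need no special case.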

Once the algorithm worked, Viola and Jones needed to train it. Cycling through a large bank of example faces, they used a machine-learning method that combined many “weak” classifiers to create one “strong” one. A “weak” classifier only gets the right answer a little more often than random guesswork. But with enough weak classifiers, each nudging the final answer a little in the right direction, you eventually have a strong, combined force: a “strong” classifier.
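The boosting method Viola and Jones used is AdaBoost. Below is a minimal sketch of that general idea on toy data, not their implementation: each weak classifier is a simple threshold test on one number (standing in for a Haar feature value), and the ten samples and labels are invented for illustration. All function names are our own.

```python
import math


def stump_predict(stump, sample):
    """A weak classifier: a threshold test on a single feature value."""
    _, feature, threshold, polarity = stump
    return polarity if sample[feature] >= threshold else -polarity


def train_stump(samples, labels, weights):
    """Pick the (feature, threshold, polarity) with the lowest weighted error."""
    best = None
    for feature in range(len(samples[0])):
        for threshold in sorted({s[feature] for s in samples}):
            for polarity in (1, -1):
                candidate = (0.0, feature, threshold, polarity)
                error = sum(
                    w for s, y, w in zip(samples, labels, weights)
                    if stump_predict(candidate, s) != y
                )
                if best is None or error < best[0]:
                    best = (error, feature, threshold, polarity)
    return best


def adaboost(samples, labels, rounds):
    """Combine `rounds` weak classifiers into a weighted vote."""
    n = len(samples)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        stump = train_stump(samples, labels, weights)
        error = min(max(stump[0], 1e-10), 1 - 1e-10)  # avoid log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # this stump's vote weight
        ensemble.append((alpha, stump))
        # Misclassified samples gain weight so the next stump focuses on them.
        weights = [
            w * math.exp(-alpha * y * stump_predict(stump, s))
            for s, y, w in zip(samples, labels, weights)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def strong_predict(ensemble, sample):
    """The strong classifier: sign of the weighted vote."""
    score = sum(alpha * stump_predict(stump, sample) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1


# Toy data: one feature value per sample, label +1 ("face") or -1 ("not face").
samples = [[x] for x in range(10)]
labels = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

single = adaboost(samples, labels, rounds=1)
combined = adaboost(samples, labels, rounds=3)
single_hits = sum(strong_predict(single, s) == y for s, y in zip(samples, labels))
combined_hits = sum(strong_predict(combined, s) == y for s, y in zip(samples, labels))
```

On this toy set no single threshold test can separate the two labels, but the weighted vote of three of them can.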

The two researchers used a database of about a thousand individual faces, breaking each down into patterns of Haar features. Initially, the acceptance threshold of each level is set low enough that most face examples are recognized while training. As each image is observed, the program classifies it as a face or not (“not face”) and then moves on to the next, and as it learns to recognize faces more successfully, the acceptance threshold is increased.
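One way to picture the acceptance threshold is sketched below. This is our own simplified illustration, assuming each level produces a single score per image; the function names, the scores, and the 80% keep rate are all made up.

```python
import math


def pick_threshold(face_scores, keep_fraction):
    """Highest threshold that still accepts at least `keep_fraction` of faces."""
    ranked = sorted(face_scores, reverse=True)
    must_keep = max(1, math.ceil(keep_fraction * len(ranked)))
    return ranked[must_keep - 1]


def passes_all_levels(level_scores, thresholds):
    """Reject an image as soon as any level's score falls below its threshold."""
    for score, threshold in zip(level_scores, thresholds):
        if score < threshold:
            return False  # classified as "not face"
    return True


# Hypothetical scores that one level assigned to ten training faces.
face_scores = [0.2, 0.9, 0.7, 0.4, 0.8, 0.6, 0.95, 0.5, 0.3, 0.85]

threshold = pick_threshold(face_scores, keep_fraction=0.8)
accepted = sum(score >= threshold for score in face_scores)

# Two levels with increasing thresholds, applied to two candidate images.
likely_face = passes_all_levels([0.5, 0.9], thresholds=[0.4, 0.6])
likely_not = passes_all_levels([0.5, 0.5], thresholds=[0.4, 0.6])
```

A low threshold lets nearly every face through at the cost of more false alarms; raising it at later levels trades some of that tolerance for stricter filtering.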
